Taylor Swift’s Endorsement Is Part of the AI Backlash

Photo-Illustration: Intelligencer

Swift, more than almost anyone else in the world, has personal reasons to worry about impersonation and misuse of her likeness through AI. She’s extremely famous, and her words carry weight for many people. As such, when it comes to AI, her experience has been both futuristic and dystopian. Like many celebrities, but especially women, she already lives in a vision of AI hell, where the voices, faces, and bodies of famous people are digitally cloned and used for scams, hoaxes, and porn. (Last year, explicit and abusive deepfakes of Taylor Swift were widely shared on X, which took the better part of a week to slow their spread.) Her endorsement can be understood as an attempt to reclaim and assert her identity, as well as her massively valuable brand, in the context of a new kind of targeted identity theft. “The simplest way to combat misinformation is with the truth,” she writes. (Don’t read Elon Musk’s response.)

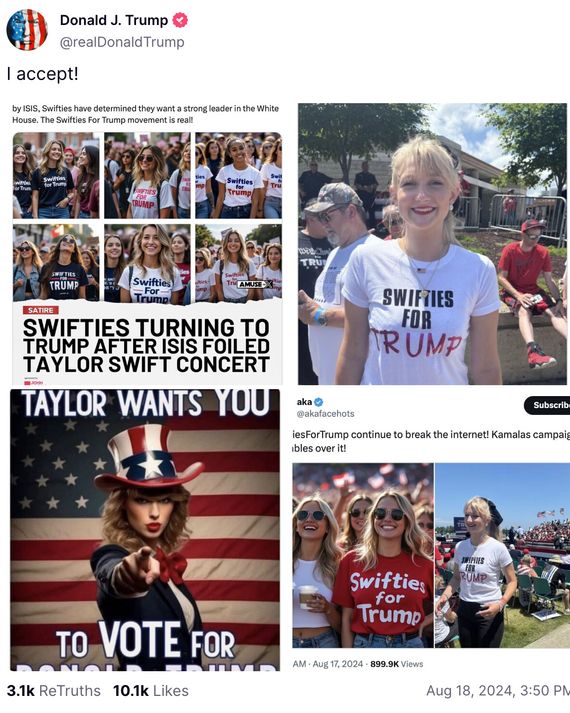

The thing about the false endorsement to which she’s responding, though, is that it probably didn’t need to be refuted. On Truth Social, Donald Trump shared a collage of trollish posts and AI-generated images: two of them had a photo of the same woman; one still had a visible “satire” tag; and another, while obviously generated by AI, was rendered in the style of an illustration. It’s ridiculous.

Photo-Illustration: Truth Social

But it’s ridiculous in a particular way that’s become miserably familiar in recent years. It’s not quite a joke (more of a taunt), and it’s certainly not an argument, or presented or understood as evidence. It’s a back-filled fantasy for partisans who are willing to believe anything. Also, and perhaps mostly, it’s a tool of mockery for trolls, including the former President himself, who posts MAGA AI nonsense in the way he says and posts lots of obviously untrue things: to say, basically, What are you gonna do about it?

I don’t want to argue that nobody could possibly fall for something like this, and I’m sure there are at least a few people in the world who are walking around with the mistaken impression that Taylor Swift released a recruiting poster for the Trump-Vance campaign (I’m nearly as sure that these people have long known exactly who they were voting for). I also don’t want to argue that a correction-via-endorsement like this is wrong or pointless: While these AI-generated images are better understood as implausible tossed-off lies than cunning deepfakes (more akin to Donald Trump saying “Taylor Swift fans, the beautiful Swifties, they come up to me all the time…” at a rally than to a disinformation operation), they’re still being used, in bad faith, to tell a story that isn’t true (and one that had the ultimate effect of raising the salience of a musician’s political endorsement that was almost inevitably going the other way). Consider the kind of fake celebrity porn that has proliferated online over the last decade: In many cases, these images aren’t creating the impression that public figures have had sex on camera, but are rather stealing their likenesses to create and distribute abusive, non-consensual fantasies. This is no less severe a problem than deepfakes as mis- or disinformation, but it is a problem of a slightly different kind.

As AI tools become more capable, and get used against more and more people, this distinction could disappear, and there are plenty of contexts in which deepfakes and voice cloning are already used for straightforward deception. For now, though, when it comes to bullshit generated by and about public figures, AI is less effective for tricking people than it is for humiliation, abuse, mockery, and fantastical wish fulfillment.

It’s good for telling the kinds of lies, now in the form of images and videos, that are stubbornly resistant to correction because nobody thought they were strictly true in the first place. Swift’s post is typical of the popular AI backlash in that it’s both anticipatory (this stuff sure seems like it could go catastrophically wrong someday!) and reacting to something less severe that’s already happening (hey, this shit sucks, I wish it would stop!). It’s a reasonable response to a half-baked technology that isn’t yet deceiving (or otherwise reshaping) the world at scale but in the meantime seems awfully well suited to use by liars and frauds. Her experience is aligned with that of the general public, for whom theories of mass automation by AI are still largely abstract. For now they just see the internet filling to the brim with AI slop.

There are echoes here of the debate over disinformation in the 2020 and especially 2016 elections, when the sheer visibility of galling nonsense on social media, combined with evidence that foreign governments were helping to distribute some of it, created the impression, or at least raised the possibility, that “fake news” could be swinging voters. It was an ill-defined problem that was distressing in the what-if-there’s-no-shared-reality sense but also sort of comforting, in that it shrunk the more intractable problems of “lying” and “people believing whatever they want” into tech-product-sized optimization and moderation issues. (Evidence that Facebook helped elect Trump is thin, but for liberals, Facebook’s obvious synergies with the MAGA movement, and the dissemination of lots of truly unbelievable stuff, were certainly an indictment of the product and perhaps social media as a whole.) Trump’s hastily re-posted fake Swifties, and Swift’s nuclear response, offer a glimpse of how, this time around, a new set of tools for generating and distributing bullshit might actually interact with popular politics: first as farce, then as brutal backlash.